For some years now, I have noticed that medical educators are looking at learning innovations in their own unique way. I first became aware of medical education happening in virtual worlds and simulations, such as Coventry’s virtual maternity ward in Second Life, and St George’s paramedic training in Second Life.

Damian Roland argues for the use of social media in a 26 June debate held at the University of Leicester

Our own University of Leicester brought medical students into a virtual genetics lab as a way of offering additional training in genetic testing. Dr Rakesh Patel and his team developed a Virtual Ward (still running today), in which students can visit virtual patients and practise arriving at a diagnosis. When I tweeted about these kinds of initiatives, I would receive replies using the hashtag #meded or #virtualpatient.

But last year I began to see a new hashtag on Twitter: #FOAMed (Free Open Access Medical Education), or just #FOAM (Free Open Access Meducation). I began to follow people like Anne Marie Cunningham (@amcunningham), Natalie Lafferty (@nlafferty), and Damian Roland (@Damian_Roland), among others who, as medics and medical educators, see the value in medical education of social media, of blogs, and of crowd-sourced sites of medical questions and answers such as gmep.org. Meanwhile, Rakesh was coming up with ideas thick and fast: why not tweet and record the Nephrology SpR Club conference this past April, and the TASME Meeting at De Montfort University this past May? And so I did!

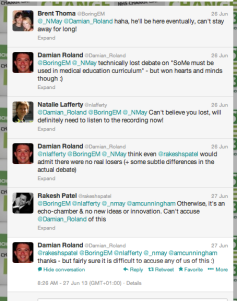

Then Rakesh and Damian had the bright idea to debate the motion: “This house believes that medical educators must use social media to deliver education.” The debate took place on 26 June at the University of Leicester, and I was able to live-stream and record it, as well as join in the Twitter discussion. In addition to the approximately 20 attendees face-to-face at the Medical School, there were several remote participants, including one from Canada. Not only did the debate spark real interest and a sense of challenge among those present (many of whom seemed to be new to the ideas of FOAMed and social media), but the discussion also continued on Twitter for a good couple of days, as the images below show. You can listen to and watch the video of the debate here.

Now the ASME Annual Scientific Meeting is happening in Edinburgh, and Rakesh, Natalie, and others are presenting a workshop on FOAM. My name is on the presenters’ list as well, and although I am not able to attend, I shall be eagerly watching for tweets from the conference. I have come to see, especially through the eyes of my medic colleagues, that Free Open Access Meducation is better than closed education: better because the wider one’s network, the more information one can access; better because open platforms reach more learners than closed ones; better because openness encourages interdisciplinary sharing and learning. The list of benefits goes on.

Terese Bird, Learning Technologist and SCORE Research Fellow