An interesting question arose from my ALT-C talk last week. It was basically “How can you use Second Life for teaching when it takes two hours to learn how to use it?”

Which isn’t really a question, of course. It’s a statement. Along the lines of “It takes my students two hours to learn to use Second Life”.

So, here’s a question in reply: Do you expect your students to be able to use MS Word? Yes? Including MailMerge? Macro programming? I suspect not. They probably just need basic formatting. Maybe headings. An index for the really advanced. And it’s the same with learning to use Second Life. Thirty minutes of training is all that’s needed for most learners in Higher Education.

The key is to consider training as part of the overall design. Here’s what we did for SWIFT.

1) Define the Learning Objectives. For our second lab it was to practice evaluating experimental results and to learn the connection between theory and practice.

2) Design activities that will best support those Learning Objectives. In our second lab, the activity was to work through a sequence of experimental steps and results, answering questions about procedure, interpreting results and seeing animations of molecular processes at critical moments.

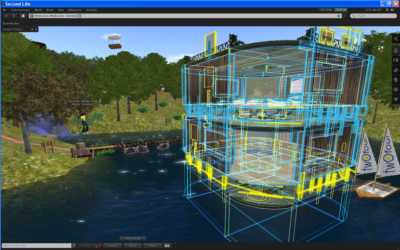

3) Design the environment necessary for those activities. We created individual lab benches with replica equipment, and a Head-Up Display that acted as the automated guide.

4) Define the SL competencies necessary to accomplish those activities. So,

a) Walk – well enough to position the avatar in one place

b) Close the sidebar

c) Touch (click on) objects

d) Chat

e) Zoom the camera in on one spot

f) Put on / remove a lab coat

g) Attach the HUD

Now, most of these only need to be done once, and some will already be understood (like clicking on things) so there’s no need for lots of practice. All that learners really need to be good at is zooming the camera. So the 30 minutes is something like 10 minutes for the easy things, 10 minutes for the lab coat and 10 minutes for the camera.
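For anyone who wants to adapt this for a different lesson, the budget can be sketched as a small lookup. This is only an illustration: the three 10-minute groups come from the paragraph above, but which skills count as "easy" (including attaching the HUD) is my assumption, and the names `TRAINING_PLAN`, `total_minutes` and `all_skills` are made up for the example.

```python
# Hypothetical time budget, in minutes, for the SL competencies listed above.
# The three 10-minute groups come from the text; assigning particular skills
# to the "easy things" group is an assumption made for illustration.
TRAINING_PLAN = {
    "easy things": {
        "minutes": 10,
        "skills": ["walk", "close the sidebar", "touch objects", "chat", "attach the HUD"],
    },
    "lab coat": {
        "minutes": 10,
        "skills": ["put on / remove a lab coat"],
    },
    "camera": {
        "minutes": 10,
        "skills": ["zoom the camera in on one spot"],
    },
}


def total_minutes(plan):
    """Add up the minutes allotted to every group in the plan."""
    return sum(group["minutes"] for group in plan.values())


def all_skills(plan):
    """Flatten the plan into a single list of skills to be trained."""
    return [skill for group in plan.values() for skill in group["skills"]]


if __name__ == "__main__":
    print(f"{len(all_skills(TRAINING_PLAN))} skills in {total_minutes(TRAINING_PLAN)} minutes")
```

Dropping a skill that a particular lesson doesn't need (or adding one it does) shows straight away what that does to the total.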

5) Create or adapt a training area suitable for learning and practicing those skills (and only those skills, so the training area may need adjusting for different groups). There are many training areas in SL, some better than others. Ours is here. Basically, the avatar needs to be constrained until they can walk properly, instructions must be very clear to all, and tasks must be in a logical progression. We have adjusted our training area over the last 12 months using observation and in-world interviews and questionnaires.

And that’s it! We don’t teach them how to run, fly, IM, search, teleport, build, offer friendship, use weapons, drive vehicles … there’s quite a list, and if they choose to continue using SL in their own time and outside of the University island they will probably want to use many of these. And they may need MailMerge in MS Word for running their own business…

So, ask learners new to SL to sign up for an SL account on the web site in advance. Then in the class, when they first use SL, ask them to enter the location of your training area at the SL login screen (so they don’t wander round some public place) and the half-hour training will pretty much run itself. (Yes, really, you just need someone hovering to help the occasional student who uses existing knowledge or expectation in place of the instructions.) We would expect similar success with OpenSim implementations, but can’t speak from experience with these.
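If you want to hand out the training-area location as a link rather than dictating it, something like the following would build a map-style SLurl. Treat it as a rough sketch: the region name and coordinates are placeholders, not our actual training area, `build_slurl` is a made-up helper, and what the login screen itself accepts (a region name, a link, or both) may depend on the viewer version.

```python
from urllib.parse import quote


def build_slurl(region, x, y, z):
    """Build a maps.secondlife.com link for a region and a position in it.

    x and y are checked against the 0-255 range of a standard 256 m region.
    """
    if not (0 <= x <= 255 and 0 <= y <= 255):
        raise ValueError("x and y must be between 0 and 255")
    if z < 0:
        raise ValueError("z must not be negative")
    # quote() escapes spaces in the region name as %20.
    return f"http://maps.secondlife.com/secondlife/{quote(region)}/{x}/{y}/{z}"


if __name__ == "__main__":
    # "Example Island" and the coordinates are placeholders for illustration.
    print(build_slurl("Example Island", 128, 128, 25))
```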

How well the actual lesson goes depends on many things, from what’s to be learned and how that’s represented in the virtual world, to how well the environment is built and how motivated the students (and teacher) are. Some things can be learned well in virtual spaces, others not. Some virtual world use is embarked upon with enthusiasm, some not. What we can say with some certainty, though, is that SL training need not be a problem.